Single-board computers (SBCs) are inexpensive, reliable and very small. As such they make excellent 24x7 home servers. This guide steps you through the process of creating such a server.

This guide was originally tested on a Raspberry Pi 400, and then later on a Raspberry Pi 4b. The main difference is that the Pi 400 board is embedded in a small keyboard.

I also have another SBC, an ODROID still running Ubuntu LTS 16.04, and I will document it as well when I update it. I am not sure yet whether I will document it here, or as another mini-document. For now I am focusing on the Raspberry Pi.

One of my goals is to promote using the command-line to do most of the work. If you are interested in expanding your horizons and understanding more about the power of the command-line, then this guide is for you. There is also a lot of detail in this guide; this is my personal preference. I grow tired of the current trend to provide internet-searched answers in a few phrases to fit on the screen of a mobile phone.

Take a look at the table of contents at the top of the document. Some users will only be interested in creating a 24x7 local file-sharing (Samba) service at home – in that case you do not need to read beyond the section on ‘Backups’.

Subsequent chapters are more complex, so an interest in the command-line is really needed. Of course, people familiar with Linux and the command-line will skip some sections of this guide.

You should look into assigning a permanent network IP address for your server in your home network so that you can easily connect to it from any of your devices. Your home network router/WiFi modem should have an option to reserve an IP address for any device. You only need to know the hardware MAC address of your future server. There will be 2 MAC addresses - one for hardwired ethernet and one for wireless. You can reserve an address for both interfaces until you decide which way you will connect your server to your router.
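Once the server is running, the MAC address of every interface can be collected in one pass. The following is a minimal script sketch rather than part of the original procedure: the function name list_macs and its optional directory argument are illustrative assumptions, and it reads the same '/sys/class/net/' files used in the session below.

```shell
# Print "interface MAC" for every network device found under a
# sysfs-style directory (default: /sys/class/net).
# The function name and the optional argument are illustrative only.
list_macs() {
    net_dir="${1:-/sys/class/net}"
    for dev in "$net_dir"/*; do
        # Skip anything that does not expose a readable 'address' file
        [ -r "$dev/address" ] || continue
        printf '%s %s\n' "$(basename "$dev")" "$(cat "$dev/address")"
    done
}

list_macs
```

The loopback device 'lo' will also be listed; only the wired and wireless entries matter for an address reservation.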

// There are a few ways to find the MAC addresses of your network devices under

// contemporary Linux distributions. One quick command-line method is to look

// in the '/sys/class/net/' sub-directory for the network devices:

$ ls /sys/class/net/*/address

/sys/class/net/eth0/address /sys/class/net/wlan0/address

/sys/class/net/lo/address

// On a Pi with Ubuntu installed the wired network device is 'eth0' and the

// wireless network device is 'wlan0'

$ cat /sys/class/net/eth0/address

e4:5f:01:a7:22:54

$ cat /sys/class/net/wlan0/address

e4:5f:01:a7:22:55

This guide was created in the Markdown markup language (the Pandoc flavour of Markdown). Markdown is wonderfully simple. Then, using the versatile Pandoc command set, both HTML and PDF formats of the document were generated. In fact this document was created using the home server as a remote desktop. The server served as a git, web and NFS server; as well it served as a remote desktop for contemporary documentation creation.

The appendix of this document is rather large. The idea is to push some of the command-line and technical detail into the appendix. Thus the flow of the document covers the basics, encouraging the reader to see the bigger picture and to avoid being smothered in the detail.

In this guide command-line sessions appear in a pale-yellow box, using a customized .console Markdown syntax highlighting convention which I created for command-line input and output. Two kinds of simplified command-line prompts appear. As well, explanatory comments starting with two slashes are coloured blue:

// Usually your prompt would be more complex, something like this:

// myname@ubuntu:~/.vim/syntax$

// or like this:

// [desktop /tmp]$

// But I simplify its appearance when illustrating command-line sessions.

// Normal Users' command-line prompt, coloured 'green':

$ some-command

// The root superuser's prompt, simplified also, and coloured 'red':

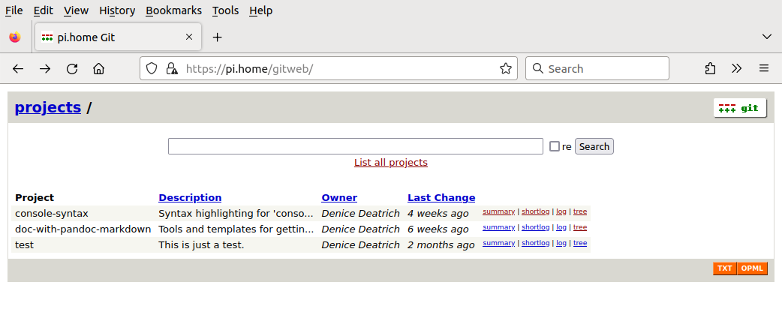

# some-other-command

From my experience creating this guide I added another git repository containing the examples of the Markdown documentation style and support files used in this guide.

Sometimes command output is long and/or uninteresting in the context of this guide. I might show such segments with an ellipsis (…).

Sometimes a double-exclamation (!!) mark may appear somewhere – this is only a reminder for myself to fix an issue at that point in the documentation. These reminders will eventually disappear.

I tend to use ‘ssh’ as a verb - it is not really correct to do this, but it really is a verb in my life. So I apologize if it annoys you.

If you discover issues with instructions in this document, or have other comments or suggestions then you can contact me on my github project page.

Though many Raspberry Pi owners run the Raspberry Pi OS (formerly known as Raspbian), in this guide I chose to use Ubuntu. Ubuntu LTS is a long-term support Debian-based Linux OS. Ubuntu is renowned for its desktop support, but it also provides a comfortable home server experience.

A server should be stable. We want to apply software updates, but we also want to avoid the need to update the major version of the base OS every year. The official Ubuntu LTS releases with the Gnome desktop environment in the main software repository are supported for up to 5 years from the time of the initial release, and for up to 10 years with extended security-only updates via Ubuntu Advantage access [1], also known as Expanded Security Maintenance (ESM).

Without ESM not all repositories get the same level of support. For example, the community-supported desktop environments located in the universe software repository only get best-effort maintenance from Canonical and the Ubuntu community. Nonetheless, most critical services are installed from the base repository, and thus have excellent functional and security support.

However, with an ESM/Advantage/Pro account all packages get security updates for 10 years from initial release. This change was introduced in January of 2023.

As with all OS distributions the versions of major software stay with the initial release, and patches to the software are for bugs and security issues for those software versions.

Generally you should think about upgrading your server OS every few years so that you stay in touch with current technologies, and so that you benefit from newer software versions.

At the time of writing this guide I used version 22.04 of Ubuntu LTS (also known as Jammy Jellyfish). It was first released in April 2022, as indicated by the release number.

If you are going to be using Ubuntu then it is wise to subscribe to a few mailing lists. There are lots of them, but a few low volume ones like these are a good idea:

I also opt to use an installation image which uses the MATE desktop system – at the bottom of that linked website is a note about why it is called MATE (pronounced mat-ay). The MATE window manager is intuitive, efficient, skinny, dependable and popular. It is widely available on most flavours of Linux. MATE is not flashy, but it gets the job done.

Even though we are creating a home server, it is useful to configure the server to provide a remote graphical desktop environment – this is why in this guide we use the desktop image rather than the server image. Then you can use the desktop for fun, learning, or perhaps as your Linux development environment from other devices. Accessing the desktop remotely is also documented in this guide.

This installation image still uses the X.org display server instead of Wayland, partly because it uses MATE which is not yet ready for Wayland at this Ubuntu LTS release, and also because this build is for the Raspberry Pi, where Wayland support is new. Moreover remote desktop support is a work in progress for Wayland environments, and is better left to the X.org protocol for now.

You will need a new or repurposed microSD card with a capacity of at least 32 GB – since this is a server we might want to store lots of photos or videos on it. See the Ubuntu MATE website for some examples of microSD cards. Recently I was able to buy a 256 GB Silicon Power microSD card for less than $25 Cdn on Amazon; this brand is rated highly for use on Raspberry Pis by testers like Tom’s Hardware.

Go to the Ubuntu MATE download website to download your image - for a Pi 4 generation with 4 or more GB of RAM the 64-bit ARM architecture (arm64) is best. For the version I used in March 2023 the image name was:

ubuntu-mate-22.04-desktop-arm64+raspi.img.xz.

There are many instructions available online to help you download the disk image and install it onto installation media - I will not reproduce the instructions here. How you create the image depends on your home computing device and its OS. There is a helpful tutorial on creating the installation image using the Raspberry Pi Imager software for 3 operating systems:

After installing the disk image on the microSD the disk partitioning looks like this using the fdisk command. I show it here so that you are aware of what is going on under the hood. Here is an example of a 256 GB microSD inserted into a USB card reader on another Linux computer where the card showed up as /dev/sde:

$ sudo fdisk -l /dev/sde

Disk /dev/sde: 231.68 GiB, 248765218816 bytes, 485869568 sectors

Disk model: FCR-HS3 -3

...

Disklabel type: dos

Disk identifier: 0x11d94b9e

Device Boot Start End Sectors Size Id Type

/dev/sde1 * 2048 499711 497664 243M c W95 FAT32 (LBA)

/dev/sde2 499712 12969983 12470272 5.9G 83 Linux

There are 2 partitions; the first (/dev/sde1) is a small boot partition whose type is FAT32, and the second (/dev/sde2) is the minimal 6 GB Linux partition. Though this microSD is 256 GB, only the first 6 GB is currently used. The automatic installation process will expand the partition right to the maximum extent of its partition or of un-allocated space. Most Linux installation images allow you to choose your disk partitioning; the Raspberry Pi Ubuntu installation image does not.
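The sizes that fdisk reports can be checked with a little arithmetic. This is a minimal sketch, assuming 512-byte sectors (which agrees with the byte and sector totals on the 'Disk' line of the fdisk output above); the helper name sectors_to_mib is made up for illustration:

```shell
# Convert a count of 512-byte sectors to whole mebibytes (MiB).
# The 512-byte sector size is an assumption taken from the 'Disk' line.
sectors_to_mib() {
    echo $(( $1 * 512 / 1024 / 1024 ))
}

sectors_to_mib 497664      # boot partition: prints 243
sectors_to_mib 12470272    # Linux partition: prints 6089 (about 5.9 GiB)
```

Both results match the 'Size' column shown by fdisk (243M and 5.9G).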

However, it is possible and useful to modify the pre-installation partitioning directly on the microSD card as described in the appendix.

In the appendix I also provide a generic Linux command-line approach to downloading, un-compressing and writing the image to the microSD card. If you are not yet very familiar with the command-line then leave this exercise for a later time in your Linux adventure.

Once you have prepared your microSD card then insert it in your Raspberry Pi. Note that the card pushes in easily. It will only go in one way. On a Pi 400 you can eject it by gently pushing on it once and it will pop out enough to grab it. There is no need to pull on it to remove it because it essentially pops out. With a Pi 4b you will need to pull it out.

Turn the power on with the Pi connected to a monitor, USB keyboard and mouse. You will shortly see the firmware rainbow splash screen. Shortly after that there is a series of screens allowing you to customize the installation:

As the installation starts it will show some informational screens to entertain you while it installs. Eventually it will reboot and present you with the login screen. Once you login you will see the default MATE desktop configuration.

Before going further immediately update the software on the system. The MATE installation image is not released often, so it can be a bit behind the package update curve. As well, any final configuration issues will be updated.

Open a terminal window by selecting:

Application -> System Tools -> MATE Terminal

and enter the following commands:

// This will update the system's knowledge about what should be updated;

// in other words, the cache of software package names pending for update:

$ sudo apt update

// then update the software; the command is actually 'upgrade', which is odd,

// at least to me.. I like 'yum check-update' and 'yum update' much better...

$ sudo apt upgrade

It will take a while. Once finished there is one more update to do before you reboot the system – the Raspberry Pi bootloader EEPROM update, in case there are pending updates to apply:

// You can check the current state of firmware updates without being root.

// Here we see that the firmware is up-to-date, but the default bootloader

// could be set to use the latest firmware:

$ rpi-eeprom-update

*** UPDATE AVAILABLE ***

BOOTLOADER: update available

CURRENT: Thu 29 Apr 16:11:25 UTC 2021 (1619712685)

LATEST: Tue 25 Jan 14:30:41 UTC 2022 (1643121041)

RELEASE: default (/lib/firmware/raspberrypi/bootloader/default)

Use raspi-config to change the release.

VL805_FW: Using bootloader EEPROM

VL805: up to date

CURRENT: 000138a1

LATEST: 000138a1

// now we need to be root since we want to apply the update:

$ sudo rpi-eeprom-update -a

*** INSTALLING EEPROM UPDATES ***

...

EEPROM updates pending. Please reboot to apply the update.

To cancel a pending update run "sudo rpi-eeprom-update -r".

Now reboot the server to get the newer kernel, and to complete the firmware update. On the far upper right taskbar, select the power button icon, and then select Switch Off -> Restart.

For people without much command-line experience it is important to get going at the command-line. When logged into the MATE desktop open a terminal window by selecting Application -> System Tools -> MATE Terminal.

Try some command-line examples in the appendix. Note that the up/down arrow keys can be used to recall your previous commands. You can edit and reuse an entry in your previous commands using the left/right arrow keys.

You will find that the ‘TAB’ key (shown below as <TAB>) is very useful for command-line completion.

Suppose you are going to use the command ‘timedatectl’. You start by typing the word ‘time’ and then hit the TAB key once, then again when you do not get a response. You will see 4 possible commands as shown below. Then to complete the command simply type d followed by another TAB and the full command will complete:

$ time<TAB><TAB>

time timedatectl timeout times

$ timed<TAB>

$ timedatectl

Look online for some tutorials; there are millions of results on Google if you search for:

Linux "command line" tutorial for beginners

See also the list of all commands used in this guide.

There are many Ubuntu-specific tutorials. You might want to check out these two:

We are going to create a Samba file sharing service on our server. Other devices like mobile phones, tablets, laptops and desktops running a variety of operating systems should be able to manage files in the designated data area.

We are not going to be really secure, in that we are allowing guest access. Presumably if you let your family and your guests connect to your network, then you would allow them to connect to your Samba server.

But as always, your internal home network should be well protected with at least a strong password for your wireless SSID connections.

First, visit Setting Up a Data Area in the appendix to find out how to create your data area.

Always use a sub-directory inside the data area to begin any new project. One of the advantages is that the lost+found directory does not become part of your project. For this Samba project we will create /data/shared.

For Samba the top level ownership of the samba area will be a user named ‘nobody’. This user is always created in Linux systems and has no login shell, so ‘nobody’ cannot log in. It is a safer user identity to use for guest access to Samba shares.

We set the access permissions using chmod [2] and chown [3].
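To see what 'g+ws' does before applying it to the real data area, the change can be tried on a scratch directory. This is only a sketch: the function name is invented here, and it assumes GNU 'stat' (as shipped with Ubuntu). The 'w' grants group write access, and the 's' sets the set-group-ID bit so that new files created inside inherit the directory's group:

```shell
# Apply 'chmod g+ws' to a throwaway directory and print the resulting mode.
# mode_after_gws is a hypothetical helper name used only for this example.
mode_after_gws() {
    scratch=$(mktemp -d)
    chmod 755 "$scratch"      # mode of a directory made with a 022 umask
    chmod g+ws "$scratch"     # add group write and the set-group-ID bit
    stat -c '%A' "$scratch"   # print the mode in 'ls -l' style
    rmdir "$scratch"
}

mode_after_gws    # prints drwxrwsr-x
```

This is the same mode string that 'ls -la' later shows for the /data/shared directory itself.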

$ cd /data

$ sudo mkdir shared

$ sudo chown nobody:nogroup shared

$ sudo chmod g+ws shared

Here are 3 example directories to create for differing purposes:

‘Music’, ‘Protected’ and ‘Test’

other examples might be ‘Videos’ and ‘Pictures’:

You will be able to create directories inside the shared area using your other devices as well.

I use the Test area initially for testing from various devices; that is, create and delete files in the test directory.

$ cd /data/shared

$ sudo mkdir Test

$ sudo chown nobody:nogroup Test

$ sudo chmod g+ws Test

I like having a general ‘Protected’ area that others can access but cannot change. I use secure-shell access to that area for dumping files that I manage without using Samba tools.

$ cd /data/shared

$ sudo mkdir Protected

$ sudo chown myname:mygroup Protected

$ sudo chmod g+ws Protected

As an example I put my old Music files in ‘Music’ so that it could be accessed from various devices 24x7. You can either keep the permissions as nobody:nogroup, allowing other people in your home network to help manage the collection, or you can change ownership so that only you manage them locally. In this example my login name is ‘myname’ with group ‘mygroup’:

$ du -sh /data/shared/Music/

7.4G /data/shared/Music/

$ ls -la /data/shared/Music/

drwxr-sr-x 9 myname mygroup 4096 Apr 15 16:03 .

drwxrwsr-x 7 nobody nogroup 4096 Feb 26 11:59 ..

-rw-r--r-- 1 myname mygroup 108364 Feb 27 2022 all.m3u

drwxr-xr-x 9 myname mygroup 4096 Feb 26 2022 Celtic

-rw-r--r-- 1 myname mygroup 13373 Mar 6 2022 Celtic.m3u

...

drwxr-xr-x 5 myname mygroup 4096 Feb 27 2022 Nostalgia

-rw-r--r-- 1 myname mygroup 6145 Feb 27 2022 Nostalgia.m3u

drwxr-xr-x 13 myname mygroup 4096 Feb 26 2022 Pop

-rw-r--r-- 1 myname mygroup 10065 Feb 27 2022 Pop.m3u

drwxr-xr-x 39 myname mygroup 4096 Feb 26 2022 Rock

-rw-r--r-- 1 myname mygroup 41656 Feb 27 2022 Rock.m3u

Simply install the samba package; apt will pull in any dependencies:

$ sudo apt install samba

...

0 upgraded, 21 newly installed, 0 to remove and 3 not upgraded.

Need to get 7,870 kB of archives.

After this operation, 44.1 MB of additional disk space will be used.

Do you want to continue? [Y/n]

...

The main configuration file is:

/etc/samba/smb.conf

The file is organized into sections:

Here are the specifics:

The modified smb.conf file is in github.
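Because smb.conf is divided into bracketed sections, a single section can be printed on its own when reviewing changes. The following is a rough sketch using awk; the function name show_section is hypothetical, and it assumes that each section header starts in the first column with nothing after the closing bracket:

```shell
# Print one section of an ini-style file such as smb.conf.
# Usage: show_section <section-name> <file>
# show_section is an illustrative helper, not a Samba tool.
show_section() {
    awk -v want="[$1]" '
        /^\[/      { in_section = ($0 == want) }   # a new header starts here
        in_section { print }                       # print only the wanted one
    ' "$2"
}
```

For example, 'show_section home /etc/samba/smb.conf' would print the share definition added in the session below, once that edit has been made.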

$ cd /etc/samba

$ sudo cp -p smb.conf smb.conf.orig

$ sudo nano smb.conf

// The 'diff' command shows differences in snippets with the line numbers

// A more elegant way to see the differences would be side-by-side:

// diff --color=always -y smb.conf.orig smb.conf | less -r

$ diff smb.conf.orig smb.conf

29c29,30

< workgroup = WORKGROUP

---

> # workgroup = WORKGROUP

> workgroup = LINUX

33a35,39

> # for Apple SMB clients

> fruit:nfs_aces = no

> fruit:aapl = yes

> vfs objects = catia fruit streams_xattr

>

62a69,70

> log level = 1 passdb:3 auth:3

>

241a250,259

>

> [home]

> comment = Samba on Raspberry Pi

> path = /data/shared

> writable = yes

> read only = no

> browsable = yes

> guest ok = yes

> create mask = 0664

> directory mask = 0775

$ sudo systemctl restart smbd nmbd

$ systemctl status smbd | grep Status:

Status: "smbd: ready to serve connections..."

$ systemctl status nmbd | grep Status:

Status: "nmbd: ready to serve connections..."

//

$ cd /var/log/samba

$ ls -ltr

total 2168

drwx------ 5 root root 4096 May 7 09:45 cores/

-rw-r--r-- 1 root root 369 May 7 10:03 log.desktop

-rw-r--r-- 1 root root 0 May 7 10:08 log.ubuntu

-rw-r--r-- 1 root root 9000 May 7 10:51 log.192.168.1.82

$ tail log.192.168.1.82

[2023/05/07 10:51:01.059310, 3] ../../source3/auth/auth.c:201(auth_check_ntlm_password)

check_ntlm_password: Checking password for unmapped user ... with the new password interface

[2023/05/07 10:51:01.059391, 3] ../../source3/auth/auth.c:204(auth_check_ntlm_password)

check_ntlm_password: mapped user is: [LINUX]\[guest]@[UBUNTU]

Tests to run to validate functionality include the following:

Testing will depend on your device and client.

Suppose you have an Android phone. Download the App named Cx File Explorer from your App Store. Under its Network tab you can open a ‘remote’ Samba share in your home network. You enter in the IP address of the Pi server and select ‘Anonymous’ as the user instead of user/pass.

(!! get an example from Windows and from an iphone)

Suppose you have a MATE desktop session on your Pi server or on another Linux device. Open a file browser:

Applications -> Accessories -> Files

The Files browser ‘File’ menu has an option: Connect to Server. If you have an older version of MATE then find the help option and search for ‘Connect to Server’.

A small connection window pops up. It is a bit annoying, so select any options that allow the file browser to remember your entries, and also create a bookmark.

There is no Samba password for ‘guest’, but the connection window will want one anyway; so give it the password ‘guest’ to make it happy.

At this point an application named seahorse might pop up. It is the GNOME encryption interface, and you can store passwords and keys in it. I don’t use it, but you might want to for this Samba share. You can always cancel the seahorse window.

For the connection request, fill in this data:

and finally connect.

Always, always, do some kind of backups on your server. For system backups, very little actually needs to be backed up, yet it is important to get into a frame of mind where you think about these things. Let’s look at what you should back up on your server, and how you might do it.

There is no need to back up everything - you can always reinstall and reconfigure. This is my favourite list of system directories to back up:

If you are playing with database services then you need to inform yourself which directories and/or data exports should be added and/or used for backups. Note that when you have created a Samba or an NFS server you will have other data directories to back up, and these directories might be large.

A backup process and a link to an example backup script are in the appendix. We look at backing up both system and data directories, including the backup of large directories. Compressed system directories are typically small. But /home and /data/shared might be large. Be aware of your space needs, and adjust backups accordingly.
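The appendix script is the reference for the real procedure. Purely as an illustration of the idea, a compressed archive of one directory could be produced as sketched here; the function name backup_dir and its two arguments are assumptions made for this example and are not taken from the appendix:

```shell
# Archive one directory as <name>.tgz inside an existing backup directory.
# Usage: backup_dir <source-directory> <destination-directory>
# backup_dir is a hypothetical helper for illustration only.
backup_dir() {
    src="$1"     # directory to back up, for example /etc
    dest="$2"    # existing directory that receives the archive
    name=$(basename "$src")
    # -C changes to the parent directory so the archive holds 'name/...'
    tar -C "$(dirname "$src")" -czf "$dest/$name.tgz" "$name"
}
```

From a root shell, 'backup_dir /etc /var/local-backups' would create /var/local-backups/etc.tgz, and 'tar -tzf /var/local-backups/etc.tgz' lists its contents without unpacking anything.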

Recovering files from backups is fairly easy. It is best to use an empty directory with adequate space. In this example we recover files from compressed tar files. We unpack the tar files etc.tgz and home.tgz in the empty directory, and then move or copy any files into place in the file system. Example:

// This example unpacks home.tgz and etc.tgz. Do this with sudo so that

// files are unpacked with the correct permissions.

$ sudo mkdir /var/local-recovery

// We can copy files from the local backup tree: /var/local-backups/

// or from the external drive once we mount it: /mnt/backups/

// So use the needed pathname instead of '/path/to/' below:

$ sudo cp -ip /path/to/home.tgz /path/to/etc.tgz /var/local-recovery/

$ cd /var/local-recovery

$ sudo tar -zxf etc.tgz

$ sudo tar -zxf home.tgz

$ sudo rm etc.tgz home.tgz

$ ls -l

drwxr-xr-x 152 root root 12288 Jun 1 10:56 etc

drwxr-xr-x 3 root root 4096 May 25 10:45 home

$ ls -l home/

drwxr-x--- 25 myname myname 4096 Jun 1 21:12 myname

If you need to recover files from large directory backups then you can copy the files directly from the removable media to your target directories. For large directory backups we do not compress the backed up files, since it takes some time and may introduce an additional disk space problem.

// This example recovers a directory inside the large directory backup of

// /home. First mount the USB drive -- the example partition here is at

// /dev/sda1:

$ sudo mount /dev/sda1 /mnt

$ cd /mnt/rsyncs

$ ls

0 3 5 copy

$ cd 0/home/myname/

$ ls

bin doc downloads etc git icons inc lib src

// Since the files are my files then I do not need to use 'sudo'.

// Here I am recovering my 'bin' directory.

// Note that I copy it to a different directory name so that I have the

// option of comparing any existing 'bin' directory in my home.

$ cp -a bin ~/bin.recovered

// Change directories away from the USB drive so that you can unmount it.

// You cannot unmount a file system if you are parked in it:

$ cd

$ sudo umount /mnt

$ pwd

/home/myname

$ diff -r bin bin.recovered

Here is a list of tasks you can apply to your server for 24x7 service. Ubuntu installations are more common on laptops and desktops which are often connected via wireless, are turned on and off frequently, and have a lot of software configuration not usually present or needed on a server.

The main objective here is to show you some options that reduce complexity and memory consumption, and might improve security and reliability. You can always circle back here in the future and try them, or just try any one topic which interests you.

By all means, ignore all of this if you don’t want to be bothered with disabling extraneous software. I have spent 3 decades managing UNIX and Linux systems, so I can be a bit picky about what runs on my systems.

If you won’t be using it on your server then turn Bluetooth off.

The Pi does not have a BIOS like personal computers do; instead configuration changes to enable or disable devices are made in the configuration file config.txt in the directory /boot/firmware/

You will need to eventually reboot the server once you have made this change. If you also disable WiFi then wait until you have finished the next task, or any other tasks in this chapter.
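The config.txt edits in this chapter each add one line at the end of the file. If you would rather script the edit than use an editor, a minimal sketch follows. The helper name append_once is invented for illustration; it adds the line only when an identical line is not already present, and it assumes the file already ends with a newline:

```shell
# Append a line to a file unless an identical line already exists.
# Usage: append_once <line> <file>
# append_once is a hypothetical helper, not a Raspberry Pi tool.
append_once() {
    line="$1"
    file="$2"
    # -x matches whole lines only, -F treats the line as a fixed string
    grep -qxF -e "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

From a root shell on the Pi, and after saving a copy of the original file, 'append_once dtoverlay=disable-bt /boot/firmware/config.txt' could be run repeatedly without duplicating the line.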

// List bluetooth devices:

$ hcitool dev

Devices:

hci0 E4:5F:01:A7:22:56

// disable bluetooth services running on the Pi

$ sudo systemctl disable blueman-mechanism bluetooth

// Always save a copy of the original file with the 'cp' command:

$ cd /boot/firmware

$ sudo cp -p config.txt config.txt.orig

// Disable bluetooth in config.txt by adding 'dtoverlay=disable-bt' at the end

$ sudo nano config.txt

// Use the 'tail' command to see the end of the file:

$ tail -3 config.txt

dtoverlay=disable-bt

If you will use the built-in ethernet interface for networking on your server then turn WiFi off. I prefer wired connections for servers, especially since newer technology offers gigabit speed ethernet. In my experience, network latency is usually better on wired connections. But if you prefer to keep the server on wireless then skip this task.

You will need to reboot the server once you have made this change, but remember to connect the ethernet cable on the Pi to your home router first!

// List wireless devices - after making this change you will not see this

// information:

$ iw dev

phy#0

Unnamed/non-netdev interface

wdev 0x2

addr e6:5f:01:a7:71:0d

type P2P-device

txpower 31.00 dBm

Interface wlan0

ifindex 3

wdev 0x1

addr e4:5f:01:a7:22:55

ssid MYNET

type managed

channel 104 (5520 MHz), width: 80 MHz, center1: 5530 MHz

txpower 31.00 dBm

// Disable wireless in config.txt by adding 'dtoverlay=disable-wifi' at the end

$ cd /boot/firmware

$ sudo nano config.txt

// Use the 'tail' command to see the end of the file:

$ tail -3 config.txt

dtoverlay=disable-bt

dtoverlay=disable-wifi

// After rebooting the Pi disable the wireless authentication service

$ sudo systemctl stop wpa_supplicant

$ sudo systemctl disable wpa_supplicant

SBCs like the Raspberry Pi do not have a real-time clock (RTC), whereas more complex systems like desktops and laptops do.

You can buy an RTC for the Pi, or you can install a package that fakes some of the functionality of a hardware clock:

$ sudo apt install fake-hwclock

...

Setting up fake-hwclock (0.12) ...

Created symlink /etc/systemd/system/sysinit.target.wants/fake-hwclock.service ...

update-rc.d: warning: start and stop actions are no longer supported; falling back to defaults

// The package complains about unsupported start/stop actions, so it does

// not start the fake clock; simply start it yourself:

$ systemctl status fake-hwclock

fake-hwclock.service - Restore / save the current clock

Loaded: loaded (/lib/systemd/system/fake-hwclock.service; enabled; vendor >

Active: inactive (dead)

Docs: man:fake-hwclock(8)

$ sudo systemctl start fake-hwclock

Without this package you will notice that the timestamps at startup are wildly wrong. For example when you look at snippets of the ‘last’ logged in users, the logs about reboots are always odd, but once you have installed the fake clock then reboot times are in line with real world time:

// without fake-hwclock:

$ last | less

...

myname pts/1 desktop.home Wed Jul 12 14:34 - 15:51 (01:17)

myname pts/0 desktop.home Wed Jul 12 14:28 - 14:46 (00:17)

reboot system boot 5.15.0-1033-rasp Mon Mar 20 08:33 - 09:30 (115+00:56)

myname pts/4 desktop.home Tue Jul 11 18:13 - 23:44 (05:31)

myname pts/2 desktop.home Tue Jul 11 16:29 - 18:21 (01:52)

myname pts/2 desktop.home Tue Jul 11 16:10 - 16:24 (00:14)

// with fake-hwclock:

...

root pts/0 ubuntu.home Wed Jul 26 10:39 still logged in

reboot system boot 5.15.0-1034-rasp Wed Jul 26 10:38 still running

myname pts/2 desktop.home Wed Jul 26 10:37 - 10:37 (00:00)

root pts/1 ubuntu.home Wed Jul 26 10:15 - down (00:23)

myname pts/0 desktop.home Wed Jul 26 10:11 - 10:38 (00:27)

Maybe like me you like seeing informational messages as a computer boots up. In that case you need to edit /boot/firmware/cmdline.txt and remove the quiet argument. On my system this one line file now ends in:

‘… fixrtc splash’

instead of

‘… fixrtc quiet splash’:

$ cd /boot/firmware

$ sudo cp -p cmdline.txt cmdline.txt.orig

$ sudo nano cmdline.txt

Simpler is better for a server. Normally 24x7 servers are headless, mouseless, keyboardless, and sit in the semi-darkness. A graphics-based console is therefore useless. Though your home server might not be as lonely as a data centre server, you might want to try a text-based console:

$ sudo systemctl set-default multi-user

$ sudo systemctl stop display-manager

// If you do have a mouse and a screen attached then you can still make

// the mouse work in a text console login -- it can be useful. At work

// I have sometimes used the mouse at a console switch to quickly copy and

// paste process numbers for the kill command.

$ sudo apt install gpm

$ sudo systemctl status gpm

Ubuntu promotes another kind of software packaging called Snaps (which includes an App store). Some users are not pleased with issues introduced by the underlying support software, and decide to delete Snap support from their systems. In my early testing of Ubuntu LTS 22.04 I also ran into the problem of firefox not being able to start, and like others I traced it back to snap (firefox is installed from a Snap package).

My opinion is that ‘Snaps’ are not meant for a server environment, and so I remove the associated software. I certainly find it distasteful to have more than a dozen mounted loop devices cluttering up output of block device commands for a handful of snap packages. I would rather free up the memory footprint and inodes for other purposes.
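To see how many snap images are currently mounted on your own system, the mount table can be counted. This is a small sketch, assuming that snap images appear as squashfs file systems mounted under /snap/; the function name and its optional file argument are illustrative only:

```shell
# Count squashfs file systems mounted under /snap/ in a mount table.
# Usage: count_snap_mounts [mount-table-file]   (default: /proc/mounts)
# count_snap_mounts is a hypothetical helper used only for this example.
count_snap_mounts() {
    awk '$2 ~ /^\/snap\// && $3 == "squashfs" { n++ } END { print n + 0 }' \
        "${1:-/proc/mounts}"
}
```

Running 'count_snap_mounts' with no argument reads the live mount table; a result of 0 after the removal steps suggests that no snap images remain mounted.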

But if you like ‘Snaps’ then skip to the next topic.

Here is a quick summary on removing Snap support, that is, all snap packages and the snapd daemon:

You should close firefox if it is running in a desktop setting. Then disable the snapd services and socket:

$ sudo systemctl disable snapd snapd.seeded snapd.socket

List the Snap packages installed, and then delete them. Leave base and snapd packages until the end. As noted in Erica’s instructions, remove packages one at a time and watch for messages warning about dependencies. This is my list of Snap packages; your list might be different depending on what you have installed:

$ snap list

Name Version ... Publisher Notes

bare 1.0 ... canonical base

core20 20230404 ... canonical base

core22 20230404 ... canonical base

firefox 112.0.2-1 ... mozilla -

gnome-3-38-2004 0+git.6f39565 ... canonical -

gnome-42-2204 0+git.587e965 ... canonical -

gtk-common-themes 0.1-81-g442e511 ... canonical -

snapd 2.59.2 ... canonical snapd

snapd-desktop-integration 0.9 ... canonical -

software-boutique 0+git.0fdcecc ... flexiondotorg classic

ubuntu-mate-pi 0+git.0f0bcdf ... ubuntu-mate -

ubuntu-mate-welcome 22.04.0-a59036a6 ... flexiondotorg classic

$ sudo snap remove firefox

$ sudo snap remove software-boutique

$ sudo snap remove ubuntu-mate-welcome

$ sudo snap remove ubuntu-mate-pi

$ sudo snap remove snapd-desktop-integration

$ sudo snap remove gtk-common-themes

$ sudo snap remove gnome-42-2204

$ sudo snap remove gnome-3-38-2004

$ sudo snap remove core22

$ sudo snap remove core20

$ sudo snap remove bare

$ sudo snap remove snapd

$ snap list

No snaps are installed yet. Try 'snap install hello-world'.

Completely remove snapd and its cache files from the system:

$ sudo apt autoremove --purge snapd

$ sudo rm -rf /root/snap

$ rm -rf ~/snap

As well, you can clean up ‘PATH’ environment variables and remove ‘snap’ from them. I also take the opportunity to fix issues that bother me. I have never overly trusted binaries in /usr/local/ (having supported them in the old commercial UNIX world) so I either push those paths to the end, or I remove them. I know that all directories in /usr/local/ are empty anyway, so I remove them:

$ cd /etc

$ sudo cp -p environment environment.orig

$ sudo nano environment

$ diff environment.orig environment

1c1

< PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:...:/usr/local/games:/snap/bin"

---

> PATH="/usr/sbin:/usr/bin:/sbin:/bin:/usr/games"

$ sudo cp -p manpath.config manpath.config.orig

$ sudo nano manpath.config

$ diff manpath.config.orig manpath.config

22d21

< MANDATORY_MANPATH /usr/local/share/man

33,36d31

< MANPATH_MAP /usr/local/bin /usr/local/man

< MANPATH_MAP /usr/local/bin /usr/local/share/man

< MANPATH_MAP /usr/local/sbin /usr/local/man

< MANPATH_MAP /usr/local/sbin /usr/local/share/man

68,69d62

< MANDB_MAP /usr/local/man /var/cache/man/oldlocal

< MANDB_MAP /usr/local/share/man /var/cache/man/local

72d64

< MANDB_MAP /snap/man /var/cache/man/snap

Then add configuration files for apt access to firefox dpkg-based packages. Finally install firefox from the Mozilla Personal Package Archive (PPA):

// Create the necessary apt configurations for firefox:

$ sudo nano /etc/apt/preferences.d/firefox-no-snap

$ cat /etc/apt/preferences.d/firefox-no-snap

Package: firefox*

Pin: release o=Ubuntu*

Pin-Priority: -1

$ sudo add-apt-repository ppa:mozillateam/ppa

...

PPA publishes dbgsym, you may need to include 'main/debug' component

Repository: 'deb https://ppa.launchpadcontent.net/.../ppa/ubuntu/ jammy main'

Description:

Mozilla Team's Firefox stable + 102 ESR and Thunderbird 102 stable builds

Support for Ubuntu 16.04 ESM is included.

...

// Install firefox

$ sudo apt install firefox

There is a big 1 GB swapfile in the root of the file system - I find that offensive, so I moved it. If you are not as easily offended as I am then skip this topic.

It is a good idea to have some kind of swap enabled, since swap is only used if too much memory is being consumed by processes. Once memory is low the system will start using any configured swap on disk. Of course this is slower than memory, but it is better to use some swap at those moments instead of having an unfortunate process die because of an out-of-memory condition.

Over time if you never see swap being used then you could turn swap off and delete the swap file.
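As a rough way to keep an eye on this, the sketch below reads free(1) output and reports whether any swap is in use. The function names swap_used and swap_report are hypothetical helpers for this example; the sample input is the free -t output shown in this guide, and the values are in KiB as free prints them by default.

```shell
#!/bin/sh
# Print the "used" column of the "Swap:" line from free(1) output on stdin.
swap_used() {
    awk '$1 == "Swap:" { print $3 }'
}

# Report whether swap is in use, given free(1) output on stdin.
# A missing "Swap:" line is treated as zero usage.
swap_report() {
    used=$(swap_used)
    if [ "${used:-0}" -gt 0 ]; then
        echo "swap in use: ${used} KiB"
    else
        echo "swap idle"
    fi
}

# Sample input; prints "swap idle".
swap_report <<'EOF'
               total        used        free      shared  buff/cache   available
Mem:         3881060      176900     3022600        5332      681560     3541748
Swap:        1048572           0     1048572
EOF

# On a live system:
# free | swap_report
```

A single reading says little; checking at different times of day, or after the server has been busy, gives a better picture before you decide to turn swap off.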

Here we create another 1 GB file – it can be much larger if needed; but if you need a lot of swap then you should investigate to see what is eating memory.

// check to see what the current swap usage is; in this case it is 0

$ free -t

total used free shared buff/cache available

Mem: 3881060 176900 3022600 5332 681560 3541748

Swap: 1048572 0 1048572

Total: 4929632 176900 4071172

// Look at what systemd does with swap, and turn off the appropriate items

$ systemctl list-unit-files | grep swap

mkswap.service disabled enabled

swapfile.swap static -

swap.target static -

// There will be a .swap rule for every swap file - this one is for /swapfile

// and we want to get rid of it

$ sudo systemctl mask swapfile.swap

Created symlink /etc/systemd/system/swapfile.swap → /dev/null.

// Even though the 'mkswap' service is by default disabled, I also

// mask it so that it doesn't come back from the dead - because it

// will come back if you don't also mask that service

$ sudo systemctl mask mkswap.service

Created symlink /etc/systemd/system/mkswap.service → /dev/null.

// turn current swap off so we can delete the old file

$ sudo swapoff -a

$ sudo rm /swapfile

// Create a new swapfile in a subdirectory

$ sudo mkdir /swap

$ sudo fallocate -l 1G /swap/swapfile

$ sudo mkswap /swap/swapfile

Setting up swapspace version 1, size = 1024 MiB (1073737728 bytes)

no label, UUID=3e64d157-6f09-48d1-94c2-3851b82a73b7

// Protect the swap file and add it to /etc/fstab

$ sudo chmod 600 /swap/swapfile

$ sudo nano /etc/fstab

$ grep swap /etc/fstab

/swap/swapfile none swap defaults 0 0

// Turn swap back on and check

$ sudo swapon -a

$ swapon

NAME TYPE SIZE USED PRIO

/swap/swapfile file 1024M 0B -2

// Note that systemd will show a new 'swap' type named 'swap-swapfile.swap'

$ systemctl --type swap

UNIT LOAD ACTIVE SUB DESCRIPTION

swap-swapfile.swap loaded active active /swap/swapfile

UNIX and Linux have a mechanism called cron which allows servers to run commands at specific times and days. However personal and mobile computers are typically not powered on all the time, so operating systems like Linux have another mechanism called anacron, which runs periodic cron-configured commands that came due while the computer was off, once it is running again. Since we are creating a 24x7 server we do not need anacron – delete it:

$ sudo apt remove anacron

$ sudo apt purge anacron

Here are some services which normally can be disabled. Of course, if any of these services are interesting to you then keep them. Note that server processes are sometimes called daemons.

The systemctl command can handle multiple services at the same time, but doing them individually allows you to watch for any feedback. You can also simply disable these services without stopping them. They will not run on the next reboot.
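If you would rather script the one-at-a-time approach, a minimal sketch could look like the following. The helper name disable_service and the DRY_RUN switch are assumptions made for this example; by default it only prints the commands, so you can review them before running anything as root.

```shell
#!/bin/sh
# Stop and then disable one service, reporting any failure.
# With DRY_RUN=1 (the default here) the commands are only printed.
DRY_RUN=${DRY_RUN:-1}

disable_service() {
    for action in stop disable; do
        if [ "$DRY_RUN" = 1 ]; then
            echo "systemctl $action $1"
        else
            systemctl "$action" "$1" || echo "warning: $action $1 failed" >&2
        fi
    done
}

# Service names taken from this guide; adjust the list to your own needs.
for svc in ModemManager hciuart; do
    disable_service "$svc"
done
```

Setting DRY_RUN=0 and running the script with sudo would apply the changes. systemctl also accepts 'disable --now', which stops and disables a unit in one step.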

// If you want to run a series of commands as root you can sudo to the bash

// shell, run your commands, and then exit the shell. Be careful to

// always exit immediately after running your commands.

$ sudo /bin/bash

// disable serial and bluetooth modems or serial devices

# systemctl stop ModemManager

# systemctl disable ModemManager

# systemctl stop hciuart

# systemctl disable hciuart

// disable VPN and printing services - you can print without running

// a local printer daemon (!!maybe document using one though )

# systemctl stop openvpn

# systemctl disable openvpn

# systemctl stop cups-browsed cups

# systemctl disable cups-browsed cups

Note that it is possible to enable some form of secure boot with OTP (One Time Programmable Memory) on a Raspberry Pi 4, but it is not yet for the faint of heart.

// disable System Security Services Daemon (sssd) if you don't need it

# systemctl disable sssd

// Disable UEFI Secure Boot (secureboot-db)

// To be sure, install mokutil and take a look:

# apt install mokutil

# man mokutil

// --sb-state means: Show SecureBoot State

# mokutil --sb-state

EFI variables are not supported on this system

# systemctl status secureboot-db

- secureboot-db.service - Secure Boot updates for DB and DBX

Loaded: loaded (/lib/systemd/system/secureboot-db.service; enabled; vendor>

Active: inactive (dead)

# systemctl disable secureboot-db

# apt autoremove --purge secureboot-db

// disable whoopsie and kerneloops if you don't want to be sending

// information to outside entities

# systemctl stop kerneloops

# systemctl disable kerneloops

# systemctl stop whoopsie

# systemctl disable whoopsie

# apt remove whoopsie kerneloops

# apt purge whoopsie kerneloops

// If you want to disable other apport-based crash reporting then remove apport

// from your server:

# apt autoremove --purge apport

// Apparmor (like SELinux) provides another layer of security to systems.

// If you are new to Linux you should keep it around to learn about it.

// For a home server I think it can be disabled; you must previously have

// removed 'Snaps':

# aa-status

apparmor module is loaded.

50 profiles are loaded.

41 profiles are in enforce mode.

...

# aa-teardown

Unloading AppArmor profiles

# aa-status

apparmor module is loaded.

# systemctl disable apparmor

...

Removed /etc/systemd/system/sysinit.target.wants/apparmor.service.

# apt autoremove --purge apparmor

dpkg: warning: directory '/etc/apparmor.d/abstractions/ubuntu-browsers.d'

not empty so not removed

# cd /etc

# mv apparmor.d apparmor.d.old

If you prefer to see time in 24 hour format, or if you prefer to tweak other locale settings, then use localectl to set global locale settings.

In this example the locale setting is generic English with a region code for Canada. Because the British English locale uses a 24 hour clock, changing only the time locale (LC_TIME) to British English will show date strings with a 24 hour clock:

// Show your current locale settings

$ locale

LANG=en_CA.UTF-8

LANGUAGE=en_CA:en

LC_CTYPE="en_CA.UTF-8"

LC_NUMERIC="en_CA.UTF-8"

LC_TIME=en_CA.UTF-8

...

// What the date is in the current (Canadian) locale

$ date

Thu 11 May 2023 08:43:57 AM MDT

// What the date would look like if (American) en_US.UTF-8 were used:

$ LC_TIME=en_US.UTF-8 date

Thu May 11 08:45:18 AM MDT 2023

// What the date would look like if (British) en_GB.UTF-8 were used:

$ LC_TIME=en_GB.UTF-8 date

Thu 11 May 08:44:00 MDT 2023

// Change it to the British style. The change is immediate, but since

// you inherit the older locale environment at login, you will not see

// the change until you log out and log back in.

$ sudo localectl set-locale LC_TIME="en_GB.UTF-8"

Ubuntu installs a log rotation package (logrotate) which controls how log files are rotated on your server. Typically once a week it renames the current log files in /var/log/, compresses the older copies, and starts a fresh log. The rotated files cycle through a fixed number of generations, and the oldest compressed log is deleted; for example here are 4 weeks' worth of rotated auth.log files:

$ ls -ltr /var/log/ | grep auth.log

-rw-r----- 1 syslog adm 3498 Apr 15 23:17 auth.log.4.gz

-rw-r----- 1 syslog adm 8178 Apr 22 23:17 auth.log.3.gz

-rw-r----- 1 syslog adm 7225 Apr 30 01:09 auth.log.2.gz

-rw-r----- 1 syslog adm 58810 May 7 00:22 auth.log.1

-rw-r----- 1 syslog adm 46426 May 11 09:17 auth.log

A better scheme is to use the ‘dateext’ option in /etc/logrotate.conf so that rotated logs keep their dated names until they are deleted:

$ ls -ltr /var/log/ | grep auth.log

-rw-r----- 1 syslog adm 3287 Apr 17 07:30 auth.log-20230417.gz

-rw-r----- 1 syslog adm 2494 Apr 23 07:30 auth.log-20230423.gz

-rw-r----- 1 syslog adm 4495 May 1 07:30 auth.log-20230501.gz

-rw-r----- 1 syslog adm 35715 May 7 07:30 auth.log-20230507

-rw-r----- 1 syslog adm 29500 May 11 09:55 auth.log

To make this change, edit /etc/logrotate.conf. We also set the number of rotations to keep to 12 weeks instead of 4 weeks. Note that per-service log file customization is possible; look at examples in /etc/logrotate.d/.

$ cd /etc

$ sudo cp -p logrotate.conf logrotate.conf.orig

$ sudo nano logrotate.conf

$ diff logrotate.conf.orig logrotate.conf

13c13,14

< rotate 4

---

> #rotate 4

> rotate 12

19c20

< #dateext

---

> dateext

Normally you get your network configuration from your home router via its DHCP service. If you are using a wired connection then consider statically configuring the IP address information on your server – there is a description of the process in the appendix in case you want to see how it is done.

By default the unattended-upgrades package is installed on Ubuntu LTS, and it is configured to run in an unattended manner. Its configuration files are in /etc/apt/apt.conf.d/, and the log files are in /var/log/unattended-upgrades/.

In particular the log files /var/log/unattended-upgrades/unattended-upgrades-dpkg.log* contain the list of all upgraded packages:

// list files oldest first, then for each file find the date & package

$ cd /var/log/unattended-upgrades

$ ls -tr unattended-upgrades-dpkg.log* | \

while read f ; do \

echo "Log File $f:" ; \

zegrep '^Log started:|^Unpacking ' "$f" ; \

echo ""; \

done

Log File unattended-upgrades-dpkg.log.1.gz:

Log started: 2023-05-10 06:05:40

Unpacking librados2 (17.2.5-0ubuntu0.22.04.3) over (17.2.5-0ubuntu0.22.04.2) ...

Log started: 2023-05-10 06:05:52

Unpacking libcephfs2 (17.2.5-0ubuntu0.22.04.3) over (17.2.5-0ubuntu0.22.04.2) ...

Log started: 2023-05-10 06:06:03

Unpacking libfreetype6:arm64 (2.11.1+dfsg-1ubuntu0.2) over (2.11.1+dfsg-1ubuntu0.1) ...

Log File unattended-upgrades-dpkg.log-20230601.gz:

Log started: 2023-05-12 06:40:40

Unpacking linux-modules-5.15.0-1028-raspi (5.15.0-1028.30) ...

Unpacking linux-image-5.15.0-1028-raspi (5.15.0-1028.30) ...

Unpacking linux-modules-extra-5.15.0-1028-raspi (5.15.0-1028.30) ...

Unpacking linux-modules-extra-raspi (5.15.0.1028.25) over (5.15.0.1027.24) ...

Log started: 2023-05-12 06:42:58

Unpacking linux-image-raspi (5.15.0.1028.25) over (5.15.0.1027.24) ...

Log started: 2023-05-12 06:43:10

Log started: 2023-05-17 06:28:44

Unpacking linux-libc-dev:arm64 (5.15.0-72.79) over (5.15.0-71.78) ...

...

If you are accustomed to monitoring and applying patches yourself, then you can delete the automatic update infrastructure, and do the patching yourself. Many work-place servers cannot be automatically updated without some risk, so if you support Linux at work you are probably used to configuring and managing your own software update policy.

If you decide to manage updates yourself then you could remove this package and its components. Of course, you should not do this until you are sure that you will follow through with doing your own updates:

$ systemctl status unattended-upgrades

Loaded: loaded (/lib/systemd/system/unattended-upgrades.service; enabled

...

$ sudo apt remove update-notifier-common unattended-upgrades

...

The following packages will be REMOVED:

ubuntu-release-upgrader-gtk unattended-upgrades update-manager update-notifier

update-notifier-common

...

$ sudo systemctl disable apt-daily-upgrade.timer apt-daily.timer

Removed /etc/systemd/system/timers.target.wants/apt-daily.timer.

Removed /etc/systemd/system/timers.target.wants/apt-daily-upgrade.timer.

Regardless of whether you have registered for and configured the free ESM update program or not, you might get tired of seeing messages in your terminal windows every time you log in.

One way to get rid of these messages is to comment out the relevant entries in the PAM (Pluggable Authentication Modules) configuration files. In the example session below I comment out the dynamic motd (message of the day) and last login messages in 2 PAM configuration files, sshd and login:

$ cd /etc/pam.d

$ sudo cp -p sshd sshd.orig

// comment out the dynamic motd configuration

$ sudo nano sshd

$ diff sshd.orig sshd

33c33

< session optional pam_motd.so motd=/run/motd.dynamic

---

> #session optional pam_motd.so motd=/run/motd.dynamic

$ sudo cp -p login login.orig

// comment out the dynamic motd configuration and the last login configuration

$ sudo nano login

$ diff login.orig login

33c33

< session optional pam_motd.so motd=/run/motd.dynamic

---

> #session optional pam_motd.so motd=/run/motd.dynamic

82c82

< session optional pam_lastlog.so

---

> #session optional pam_lastlog.so

// For sshd, you need to edit /etc/ssh/sshd_config and set 'PrintLastLog' to no

// We won't do that here; instead we will do it in the secure-shell chapter.

The other optional pam_motd session entry, which displays the static file /etc/motd, was not commented out, so a message will still appear in your terminal window whenever you create a file named /etc/motd.

$ grep -h motd /etc/pam.d/sshd /etc/pam.d/login | grep -v '^#'

session optional pam_motd.so noupdate

session optional pam_motd.so noupdate

It is unlikely that you will create a message that way for yourself. However, it is an interesting idea to drop an information file named /etc/motd on your server if your daily backups fail …

// Somewhere in your script you have:

echo "Your backups failed at `date`" >>/etc/motd

// then when you log in the next day:

$ ssh pi.home

Your backups failed at Fri 28 Jul 01:19:45 MDT 2023

The Secure Shell daemon, sshd, is a very useful and important service for connecting between computers near or far. If you are never going to connect via SSH into your Pi home server from within your network then do NOT install the daemon. You can always use the secure shell client, ssh, to initiate a connection to some external server – for that you do not need sshd.

If you will be needing sshd then first install it, since it is not installed by default in the LTS desktop version:

$ sudo apt install openssh-server

If you will be using ssh to connect to any local Linux systems, then think about configuring your local area network (LAN) to suit your taste. There is an explanation in the appendix.

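There are some sshd configuration issues that I like to fix in the secure shell daemon’s configuration file, /etc/ssh/sshd_config. As the diff below shows, the changes are: listen on IPv4 only (AddressFamily inet), suppress the last-login message (PrintLastLog no), enable DNS lookups of connecting hosts (UseDNS yes), and restrict which users may connect and from where (AllowUsers):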

$ cd /etc/ssh

$ sudo cp -p sshd_config sshd_config.orig

// edit the file:

$ sudo nano sshd_config

$ diff sshd_config.orig sshd_config

15a16

> AddressFamily inet

95a97

> PrintLastLog no

101a104

> UseDNS yes

122a126,131

>

> # Limit access to root user; only local users can connect via ssh

> # to the root user, but only if root's authorized_keys file allows them.

> # note: using @localhost does not work on ubuntu unless you set 'UseDNS' to yes

> AllowUsers myname@192.168.1.* *@localhost

>

// Another option is to also allow root access to this server from your Linux

// desktop (eg: 192.168.1.65). Then the 'AllowUsers' configuration would

// look like this:

AllowUsers myname@192.168.1.* root@192.168.1.65 *@localhost

If you use the Linux command-line for work between computers you soon understand the usefulness of ssh. Here we go through the exercise of creating an ssh key pair so that you can connect securely between devices.

We use ssh-keygen to create the key pair. You should treat the private key carefully, distributing it to your desktop systems only. You can copy your public key to remote hosts, where you create an authorized_keys file that specifies which public keys are allowed to connect without using a standard system password.

Over time the Secure Shell key types have changed. Some key types are no longer considered secure, like SSH-1 or DSA keys. Here we will use an SSH RSA-based key with a key size of 4096 bits. Always use a strong passphrase. It is not like a password since you have more liberty to use combinations of characters; as the man-page on ssh-keygen says:

A passphrase is similar to a password, except it can be a phrase with a series of words, punctuation, numbers, whitespace, or any string of characters you want.

I would not create a passphrase that is less than 16 characters; I would certainly never set an empty passphrase.

// If you do not yet have a .ssh directory in your home directory then

// create one now; and give access to yourself only:

$ cd

$ mkdir .ssh

$ chmod 700 .ssh

// Generate the key:

$ ssh-keygen -t rsa -b 4096

Generating public/private rsa key pair.

Enter file in which to save the key (/home/myname/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/myname/.ssh/id_rsa.

// The private key is named 'id_rsa' and the public key is named 'id_rsa.pub'

// Note the permissions of these 2 files; the private key is protected

// and is read-write only to the owner.

$ ls -l ~/.ssh/id_rsa ~/.ssh/id_rsa.pub

-rw------- 1 myname myname 3326 May 2 22:34 /home/myname/.ssh/id_rsa

-rw-r--r-- 1 myname myname 746 May 2 22:34 /home/myname/.ssh/id_rsa.pub

Be sure to back up important directories like $HOME/.ssh – in your home environment it might not seem important, but once you start using your ssh keys for access to external resources then you should follow good practices. If you lose the private key then you will need to generate a new key pair.
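One simple way to take such a copy is a compressed tar archive that only you can read. The sketch below is an illustration under stated assumptions: backup_dir is a hypothetical helper, and the destination path in the usage comment is a placeholder for wherever you keep your backups.

```shell
#!/bin/sh
# Archive a directory into a gzip-compressed tarball readable only by
# its owner. Usage: backup_dir SOURCE_DIR ARCHIVE_FILE
backup_dir() {
    src=$1
    archive=$2
    [ -d "$src" ] || { echo "no such directory: $src" >&2; return 1; }
    # umask 077 gives a newly created archive mode 600; the subshell keeps
    # the umask change from leaking into the calling shell.
    (
        umask 077
        tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")"
    )
}

# Example (the destination path is a placeholder):
# backup_dir "$HOME/.ssh" "/path/to/backups/ssh-$(date +%Y%m%d).tar.gz"
```

The archive stores paths relative to the parent directory, so restoring it with 'tar -xzf' from your home directory recreates .ssh with its original permissions.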

Suppose you created your keys on your desktop, and you want to use them to ssh to your Linux home server without using a standard password. To do this you create an authorized keys file on the server:

// secure-copy your public key to the linux server (assuming the server is named 'pi')

$ scp ~/.ssh/id_rsa.pub myname@pi:~/

The authenticity of host 'pi (192.168.1.90)' can't be established.

ECDSA key fingerprint is SHA256:iP...

ECDSA key fingerprint is MD5:79:54:...

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'pi,192.168.1.90' (ECDSA) to the list of known hosts.

// On the server create your authorized_keys file if it does not exist

// inside '~/.ssh', and then 'cat' your public key to the end of the file.

// The authorized_keys file should always be protected.

$ ssh myname@pi

myname@pi's password:

$ mkdir ~/.ssh

$ chmod 700 ~/.ssh

$ cd ~/.ssh

$ touch authorized_keys

$ chmod 600 authorized_keys

$ cat ~/id_rsa.pub >> authorized_keys

$ tail -1 authorized_keys

ssh-rsa AAAAB3...6oLYnLx5d myname@somewhere.com

$ rm ~/id_rsa.pub

// logout from the server session

$ exit

// Back on the desktop verify that you can ssh into the server using only

// the key's passphrase (and not your password):

$ ssh myname@pi

Enter passphrase for key '/home/myname/.ssh/id_rsa':

Welcome to Ubuntu 22.04.2 LTS (GNU/Linux 5.15.0-1027-raspi aarch64)

...

$ exit

// If you want to only allow ssh access to your account from a specific

// computer in your LAN, then limit the hosts which are allowed by using

// the 'from=' option. Edit the authorized_keys file and prepend the

// 'from=' option like this:

$ pwd

/home/myname/.ssh

// allow only from the host with IP address 192.168.1.65:

$ nano authorized_keys

$ tail -1 authorized_keys

from="192.168.1.65" ssh-rsa AAAAB3...6oLYnLx5d myname@somewhere.com

The goal here is to start an SSH agent on your desktop, and add your key(s) to the agent.

With a few tweaks we allow other programs to inherit the ssh-agent environment variables, so that you avoid re-entering passwords and passphrases until you log out, reboot or turn off your desktop.

Download the shell script named prime-ssh-keys.sh to start the agent. The script saves the environment variables in a file named:

~/.ssh-agent-info-YOUR-FULL-HOSTNAME
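The downloaded prime-ssh-keys.sh is not reproduced here. As a rough idea of how an info file of this kind could be produced, the sketch below filters 'ssh-agent -s' style output down to the two variable assignments and stores them privately. The helper name save_agent_info is hypothetical and is not taken from the author's script.

```shell
#!/bin/sh
# Keep only the SSH_* variable assignments from "ssh-agent -s" style
# output on stdin and store them in the given file, readable by the
# owner only. The trailing "echo Agent pid ..." line is dropped.
save_agent_info() {
    info_file=$1
    ( umask 077; grep '^SSH_' > "$info_file" ) && chmod 600 "$info_file"
}

# Hypothetical usage on a desktop (starts a new agent, then adds keys):
# ssh-agent -s | save_agent_info "$HOME/.ssh-agent-info-$(hostname)"
# . "$HOME/.ssh-agent-info-$(hostname)"
# ssh-add
```

Any shell or script that later sources the file picks up the same agent socket and PID, which is the effect the steps below rely on.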

// Copy the shell script to your home 'bin' directory; create it if needed:

$ cd

$ mkdir bin

$ cp /path/to/prime-ssh-keys.sh ~/bin/

$ chmod 755 ~/bin/prime-ssh-keys.sh

// Run the script:

$ ~/bin/prime-ssh-keys.sh

Enter passphrase for /home/myname/.ssh/id_rsa:

Identity added: /home/myname/.ssh/id_rsa (/home/myname/.ssh/id_rsa)

// This file sets and exports environment variables for the socket and the PID:

$ cat ~/.ssh-agent-info-desktop.home

SSH_AUTH_SOCK=/tmp/ssh-XXXXXXxRlqsm/agent.21324; export SSH_AUTH_SOCK;

SSH_AGENT_PID=21325; export SSH_AGENT_PID;

// Now if you 'source' the agent file to inherit the environment variables

// you will be able to ssh into the linux server without using

// a password or passphrase:

$ . ~/.ssh-agent-info-desktop.home

$ ssh -Y pi.home

Welcome to Ubuntu 22.04.2 LTS (GNU/Linux 5.15.0-1027-raspi aarch64)

...

Last login: Thu May 11 23:56:17 2023 from desktop.home

$

In a MATE desktop setting, you can add startup programs that run as soon as you log into your desktop. You can find the startup options in:

Menus -> System -> Preferences -> Personal -> Startup Applications

Create and add the script – it will open a temporary terminal window asking for the passphrase(s) for your key(s). The terminal window can be any terminal that allows you to run a shell script as an argument. You can use mate-terminal, or if you have installed the xterm package then you can use xterm. Note that the script starts with a shebang and so invokes a shell (on Ubuntu /bin/sh is a symbolic link to dash, not bash):

// make the 'bin' directory if it does not exist:

$ cd

$ mkdir -p bin

$ touch ~/bin/exec-prime-ssh-keys.sh

$ chmod 755 ~/bin/exec-prime-ssh-keys.sh

$ nano ~/bin/exec-prime-ssh-keys.sh

$ cat ~/bin/exec-prime-ssh-keys.sh

#!/bin/sh

#Decide which terminal command you will use:

exec mate-terminal -e /home/myname/bin/prime-ssh-keys.sh 2>/dev/null &

#exec xterm -u8 -e /home/myname/bin/prime-ssh-keys.sh 2>/dev/null &

Another useful tactic is to get your personal bash shell configuration file to inherit the SSH agent’s environment variables. Create a small script named ~/.bash_ssh_env which provides the variables. You can process that file in your ~/.bashrc file so that any new terminal window you launch will always inherit the variables. As well, other scripts which might need the variables can do the same.

// First create .bash_ssh_env; we 'cat' it after to show what it contains:

$ nano ~/.bash_ssh_env

$ cat ~/.bash_ssh_env

ssh_info_file=$HOME/.ssh-agent-info-`/usr/bin/hostname`

if [ -f $ssh_info_file ] ; then

. $ssh_info_file

fi

// Then source ~/.bash_ssh_env inside your .bashrc file by simply including

// the following line in ~/.bashrc:

. ~/.bash_ssh_env

You can configure some personal preferences in a configuration file named $HOME/.ssh/config.

I have a few favourite settings which have solved issues I have encountered in the past (like setting KeepAlive and ServerAliveInterval). A new favourite is setting HashKnownHosts to no. I like seeing the names of hosts I have connected to in ~/.ssh/known_hosts. Debian and Ubuntu set HashKnownHosts to yes globally; the result is that you can no longer see hostnames or IP addresses in your known_hosts file because they have been ‘hashed’.
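To illustrate the difference, the sketch below lists the readable host names from known_hosts-format text and counts the hashed entries, which begin with '|1|' and cannot be read back. The function name list_known_hosts is a hypothetical helper, and marker lines such as @cert-authority are not handled in this minimal version.

```shell
#!/bin/sh
# List host names and addresses from known_hosts-format text on stdin.
# Hashed entries are counted on stderr instead of listed.
list_known_hosts() {
    awk '
        /^#/ || NF == 0 { next }            # skip comments and blank lines
        $1 ~ /^\|1\|/   { hashed++; next }  # hashed host field
        {
            # the first field may hold several comma-separated names
            n = split($1, hosts, ",")
            for (i = 1; i <= n; i++) print hosts[i]
        }
        END {
            if (hashed) print hashed " hashed entries not shown" > "/dev/stderr"
        }
    ' | LC_ALL=C sort -u
}

# On a real system:
# list_known_hosts < ~/.ssh/known_hosts
```

With HashKnownHosts set to no, new entries are stored in the readable form; entries that were hashed earlier stay hashed until you remove them and reconnect.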

This is also where you can assign customized per-host ssh key pair filenames to particular hosts.

// Create and edit your ssh config file:

$ cd ~/.ssh/

$ touch config

$ chmod 600 config

$ nano config

$ cat config

## see: man ssh_config

## ssh configuration data is parsed in the following order:

## 1. command-line options

## 2. user's configuration file (~/.ssh/config)

## 3. system-wide configuration file (/etc/ssh/ssh_config)

## Any configuration value is only changed the first time it is seen.

## Therefore this file overrides system-wide defaults.

Host *

KeepAlive yes

ServerAliveInterval 60

HashKnownHosts no

## Example private key which has a customized key name for github.com

Host github.com

IdentityFile ~/.ssh/id_rsa_github

If you have various devices at home that you would like to use as Linux desktops without changing the current environment on those devices, you can configure your home Linux server to provide that opportunity.

For all remote desktop options, first create an .Xsession file in your home directory on the server and configure it to start a MATE desktop session:

$ cd

$ nano .Xsession

$ cat .Xsession

/usr/bin/mate-session

Most operating systems have client support for Microsoft’s Remote Desktop Protocol. On Linux there is also a software package named xrdp which can provide RDP.

Note that RDP is typically not really secure without adding some additional security features. However, from within your home network xrdp is okay.

We install xrdp, tweak the configuration a little, and restart xrdp:

$ sudo apt install xrdp

...

The following additional packages will be installed:

xorgxrdp

...

Setting up xrdp (0.9.17-2ubuntu2) ...

Generating 2048 bit rsa key...

ssl_gen_key_xrdp1 ok

saving to /etc/xrdp/rsakeys.ini

Created symlink /etc/systemd/system/multi-user.target.wants/xrdp-sesman.service ...

Created symlink /etc/systemd/system/multi-user.target.wants/xrdp.service ...

Setting up xorgxrdp (1:0.2.17-1build1) ...

...

// The configuration file is xrdp.ini; here we disable ipv6 by stipulating

// 'tcp://:3389' in the port configuration:

$ cd /etc/xrdp

$ sudo cp -p xrdp.ini xrdp.ini.orig

$ sudo nano xrdp.ini

$ diff xrdp.ini.orig xrdp.ini

23c23,24

< port=3389

---

> ;;port=3389

> port=tcp://:3389

// restart xrdp

$ sudo systemctl restart xrdp

You can test the setup from any other Linux computer with remmina installed, or you can secure-shell into the Linux server with X11 forwarding using the ‘-Y’ option and run ‘remmina’ from the command-line; that is:

// Suppose that your server is named pi.home

$ ssh -Y pi.home

// Test if X11 forwarding is working:

$ xhost

access control enabled, only authorized clients can connect

SI:localuser:myname

// If remmina is not installed, then install it:

$ sudo apt install remmina

$ remmina

// Start remmina and log into the server with your username and password.

// You can save a connection profile with a custom resolution setting

// to get the best possible presentation.

There are many web-based tutorials; check out ‘XRDP on Ubuntu 22.04’. This tutorial shows how to connect from Windows and from macOS as well.

X2Go is my favourite remote desktop setup since it runs seamlessly via secure shell connections and can use SSH key pairs to avoid password use. Thus I can comfortably connect as a remote desktop to remote servers across the continent, and write and test code as if I was down the hall from the remote server.

// install both the server and the client software

$ sudo apt install x2goserver x2goclient

...

Setting up x2goserver-x2goagent (4.1.0.3-5) ...

Setting up x2goserver (4.1.0.3-5) ...

Created symlink /etc/systemd/system/multi-user.target.wants/x2goserver.service

...

$ systemctl list-unit-files | grep -i x2go

x2goserver.service enabled enabled

Your local desktop must have x2goclient installed so that you can start a remote X2Go desktop. From any Linux desktop running an X.org service you can start it from the command-line. This way the client inherits the SSH environment variables (you can also embed a small shell script which provides the environment in a custom application launcher).

// Bring up the application window; redirect stderr to /dev/null to ignore

// various uninteresting messages.

$ x2goclient 2>/dev/null

Create and save the session parameters by starting a new session:

If you are using X2Go between different Linux varieties you might need to solve a few problems like font paths.

At the time of documenting this setup I found that starting the x2goclient from an older Linux system like CentOS 7 to this Ubuntu system needed a tweak in the Ubuntu Pi’s sshd configuration in /etc/ssh/sshd_config; it was fixed by adding ‘PubkeyAcceptedAlgorithms +ssh-rsa’. This was not needed between similar Ubuntu setups.
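If you need that tweak, one way to add the directive without duplicating it on later runs is sketched below. The helper name ensure_line is hypothetical; the commented usage assumes you run it as root on the Pi and that the directive belongs at the end of the file (if your sshd_config ends with a Match block, place the line above that block by hand instead).

```shell
#!/bin/sh
# Append a line to a configuration file unless that exact line is
# already present. Usage: ensure_line FILE 'LINE'
ensure_line() {
    file=$1
    line=$2
    if grep -qxF -- "$line" "$file" 2>/dev/null; then
        echo "already present"
    else
        printf '%s\n' "$line" >> "$file" && echo "added"
    fi
}

# On the Pi, as root, followed by a syntax check and a service restart:
# cp -p /etc/ssh/sshd_config /etc/ssh/sshd_config.bak
# ensure_line /etc/ssh/sshd_config 'PubkeyAcceptedAlgorithms +ssh-rsa'
# sshd -t && systemctl restart ssh
```

Running 'sshd -t' before the restart checks the configuration for syntax errors, which helps avoid locking yourself out of a remote session.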

Usually your home directory on your Linux desktop is where most of your important files reside. When you shutdown your desktop then those files are inaccessible. If you also have a Linux laptop then it would be nice to have the same home directory available on both the desktop and the laptop.

Putting your home directory on the Linux server would solve this issue. Here we look at installing an NFS service for 24x7 availability. If you have no other Linux devices then skip this chapter.

First install the needed packages. The nfs-common package contains both client and server elements; the nfs-kernel-server contains the server daemons rpc.mountd and rpc.nfsd.

$ sudo apt install nfs-common nfs-kernel-server

...

The following NEW packages will be installed:

keyutils libevent-core-2.1-7 nfs-common nfs-kernel-server rpcbind

...

Setting up rpcbind (1.2.6-2build1) ...

Created symlink /etc/systemd/system/multi-user.target.wants/rpcbind.service ...

Created symlink /etc/systemd/system/sockets.target.wants/rpcbind.socket ...

...

Creating config file /etc/idmapd.conf with new version

Creating config file /etc/nfs.conf with new version

...

Created symlink /etc/systemd/system/multi-user.target.wants/nfs-client.target ...

Created symlink /etc/systemd/system/remote-fs.target.wants/nfs-client.target ...

...

Setting up nfs-kernel-server (1:2.6.1-1ubuntu1.2) ...

Created symlink /etc/systemd/system/nfs-client.target.wants/nfs-blkmap.service ...

Created symlink /etc/systemd/system/multi-user.target.wants/nfs-server.service ...

...

Creating config file /etc/exports with new version

Creating config file /etc/default/nfs-kernel-server with new version

...

// show NFS and RPC services which are now enabled:

$ systemctl list-unit-files --state=enabled | egrep 'nfs|rpc'

nfs-blkmap.service enabled enabled

nfs-server.service enabled enabled

rpcbind.service enabled enabled

rpcbind.socket enabled enabled

nfs-client.target enabled enabled

// show NFS and RPC processes currently running:

$ ps -ef |egrep 'nfs|rpc'

_rpc 271754 1 0 10:45 ? 00:00:00 /sbin/rpcbind -f -w

root 272176 2 0 10:46 ? 00:00:00 [rpciod]

root 272280 1 0 10:46 ? 00:00:00 /usr/sbin/rpc.idmapd

statd 272282 1 0 10:46 ? 00:00:00 /sbin/rpc.statd

root 272285 1 0 10:46 ? 00:00:00 /usr/sbin/nfsdcld

root 272286 1 0 10:46 ? 00:00:00 /usr/sbin/rpc.mountd

root 272294 2 0 10:46 ? 00:00:00 [nfsd]

root 272295 2 0 10:46 ? 00:00:00 [nfsd]

root 272296 2 0 10:46 ? 00:00:00 [nfsd]

root 272297 2 0 10:46 ? 00:00:00 [nfsd]

root 272298 2 0 10:46 ? 00:00:00 [nfsd]

root 272299 2 0 10:46 ? 00:00:00 [nfsd]

root 272300 2 0 10:46 ? 00:00:00 [nfsd]

root 272301 2 0 10:46 ? 00:00:00 [nfsd]

There are a few files to configure. We remove IPv6 RPC services by commenting out udp6 and tcp6 from /etc/netconfig:

$ sudo cp -p /etc/netconfig /etc/netconfig.orig

$ sudo nano /etc/netconfig

$ diff /etc/netconfig.orig /etc/netconfig

15,16c15,16

< udp6 tpi_clts v inet6 udp - -

< tcp6 tpi_cots_ord v inet6 tcp - -

---

> #udp6 tpi_clts v inet6 udp - -

> #tcp6 tpi_cots_ord v inet6 tcp - -

Before restarting the daemons we see various IPv6 processes running:

$ sudo lsof -i | grep rpc | grep IPv6

systemd 1 root 144u IPv6 899098 0t0 TCP *:sunrpc (LISTEN)

systemd 1 root 145u IPv6 899100 0t0 UDP *:sunrpc

rpcbind 271754 _rpc 6u IPv6 899098 0t0 TCP *:sunrpc (LISTEN)

rpcbind 271754 _rpc 7u IPv6 899100 0t0 UDP *:sunrpc

rpc.statd 272282 statd 10u IPv6 897604 0t0 UDP *:59540

rpc.statd 272282 statd 11u IPv6 897608 0t0 TCP *:47971 (LISTEN)

rpc.mount 272286 root 6u IPv6 900420 0t0 UDP *:53821

rpc.mount 272286 root 7u IPv6 902332 0t0 TCP *:45067 (LISTEN)

rpc.mount 272286 root 10u IPv6 902347 0t0 UDP *:43059

rpc.mount 272286 root 11u IPv6 902352 0t0 TCP *:39207 (LISTEN)

rpc.mount 272286 root 14u IPv6 902367 0t0 UDP *:52374

rpc.mount 272286 root 15u IPv6 902372 0t0 TCP *:52705 (LISTEN)
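The hand edit of /etc/netconfig could also be scripted. The following is only a sketch, and the function name comment_out_ipv6 is hypothetical; it prints a copy of the file with the udp6 and tcp6 entries commented out and leaves the input file untouched.

```shell
# comment_out_ipv6 FILE
# Print FILE with the udp6 and tcp6 entries commented out.
# The input file itself is not modified.
comment_out_ipv6() {
    # Prefix '#' to lines whose first field is udp6 or tcp6
    sed -E 's/^(udp6|tcp6)([[:space:]])/#\1\2/' "$1"
}
```

With the backup copy in place, something like 'comment_out_ipv6 /etc/netconfig.orig | sudo tee /etc/netconfig >/dev/null' should produce the same result as the nano session, which the diff command can confirm.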

// restart the daemons (only the rpcbind ports still report IPv6)

$ sudo systemctl restart rpcbind nfs-server rpc-statd

Finally, the NFS shares need to be published. It is best to export /home so that logins on both the server and any clients use the same ‘/home’ directories. If you use another directory name you need to change the home directory path in the password file, or create a symbolic link from /home to the new directory. This can be done, but it is a bit messy.
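An entry in /etc/exports names the directory, followed by one client(options) field for each client that may mount it. Note that there must be no space between the client address and the opening parenthesis. As a rough sketch, a small helper could build such a line from a list of client addresses; the function name export_line is hypothetical, and the options are simply the ones this guide uses for its own export of /home.

```shell
# export_line DIR CLIENT...
# Print one /etc/exports entry granting each CLIENT the same options.
export_line() {
    dir=$1
    shift
    opts='rw,sync,no_subtree_check'
    printf '%s' "$dir"
    for client in "$@"; do
        # No space between the client and '(' - that would change the meaning
        printf ' %s(%s)' "$client" "$opts"
    done
    printf '\n'
}
```

For example, 'export_line /home 192.168.1.65 192.168.1.85' prints a single line covering both clients, which you could then paste into the exports file.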

// Edit the exports file on the NFS server:

$ sudo cp -p /etc/exports /etc/exports.orig

$ sudo nano /etc/exports

$ diff /etc/exports.orig /etc/exports

6c6,11

< #

---

>

> # Export home directories using NFSv3 syntax

> # Limit nfs access to the IP address of the client node(s)

> /home 192.168.1.65(rw,sync,no_subtree_check) \

> 192.168.1.85(rw,sync,no_subtree_check)

>

Then export the share. It can then be mounted on other clients.

$ sudo exportfs -a

We will use autofs on other Linux hosts to automatically mount and unmount the home directory on demand. Note that any existing directories in /home on your client will be affected, since we are going to mount the server’s home directories on top of the client’s /home directory. Anything already there will be hidden until you unmount the server’s version. Consider migrating the content of /home to the server.

This example uses another Ubuntu host as the NFS client. We install autofs on it and then edit the automount map files as needed.

// install the autofs package

$ sudo apt install autofs

The following additional packages will be installed:

keyutils libevent-core-2.1-7 nfs-common rpcbind

...

Setting up rpcbind (1.2.6-2build1) ...

Created symlink /etc/systemd/system/multi-user.target.wants/rpcbind.service ...

Created symlink /etc/systemd/system/sockets.target.wants/rpcbind.socket ...

...

Setting up autofs (5.1.8-1ubuntu1.2) ...

Creating config file /etc/auto.master with new version

...

Setting up nfs-common (1:2.6.1-1ubuntu1.2) ...

...

Created symlink /etc/systemd/system/multi-user.target.wants/nfs-client.target ...

Created symlink /etc/systemd/system/remote-fs.target.wants/nfs-client.target ...

// If you are getting used to reading 'man' pages, then it is useful to use

// the 'man -k' option to get a list and description of all man pages available

// matching a topic:

$ man -k autofs

auto.master (5) - Master Map for automounter consulted by autofs

autofs (5) - Format of the automounter maps

autofs (8) - Service control for the automounter

autofs.conf (5) - autofs configuration

automount (8) - manage autofs mount points

// Now edit configuration file /etc/autofs.conf and get rid of the 'amd' section

// Keep things simple.

$ cd /etc

$ sudo cp -p autofs.conf autofs.conf.orig

$ sudo nano autofs.conf

$ diff autofs.conf.orig autofs.conf

404c404

< [ amd ]

---

> #[ amd ]

410c410

< dismount_interval = 300

---

> #dismount_interval = 300

// Edit configuration file /etc/auto.master

// We comment out the '+dir:/etc/auto.master.d' and '+auto.master' lines and

// append the auto.home configuration

$ sudo cp -p auto.master auto.master.orig

$ sudo nano auto.master

$ diff auto.master.orig auto.master

22c22

< +dir:/etc/auto.master.d

---

> #+dir:/etc/auto.master.d

38c38,43

< +auto.master

---

> #+auto.master

>

> ## This is a direct mountpoint for NFS-provided home directories ..

> /- /etc/auto.home --timeout 300

>
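The direct map named on that line, /etc/auto.home, holds one entry per user in the form 'mount-point -options server:/path'. Since every new user needs such an entry, generating it may save some typing. The following is a sketch only: the function name auto_home_entry is hypothetical, and the mount options are copied from the /etc/auto.home file used in this guide.

```shell
# auto_home_entry USER SERVER
# Print a direct-map entry that mounts SERVER:/home/USER on /home/USER.
auto_home_entry() {
    user=$1
    server=$2
    opts='rw,hard,intr,rsize=32768,wsize=32768,retrans=2,timeo=600,tcp,nfsvers=3'
    printf '/home/%s -%s %s:/home/%s\n' "$user" "$opts" "$server" "$user"
}
```

A new user could then be added with something like 'auto_home_entry newuser 192.168.1.90 | sudo tee -a /etc/auto.home', where newuser is a placeholder for the login name.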

// We create /etc/auto.home; every regular user you create also needs

// an entry in this file.

$ sudo nano /etc/auto.home

$ cat /etc/auto.home

## For every new user you must add their home directory entry to this file.

## The IP address belongs to the Linux Server of course...

/home/myname \

-rw,hard,intr,rsize=32768,wsize=32768,retrans=2,timeo=600,tcp,nfsvers=3 \

192.168.1.90:/home/myname

Now, you would normally restart autofs - but do not do this immediately:

// restart autofs:

$ sudo systemctl restart autofs

Consider the impending ‘chicken-and-egg’ issue: if you restart autofs while you are logged in as a regular user, your current home directory will disappear. There is some help on this topic in the appendix. Perhaps the easiest solution, if you do not want to fiddle with changes to root access, is to reboot your client device. If there were no mistakes in your configuration changes, then when the client is back up you should have a home directory that is served by your home server.

$ pwd

/home/myname

$ df -h .

Filesystem Size Used Avail Use% Mounted on

192.168.1.90:/home/myname 50G 464M 50G 1% /home/myname

Once you NFS-mount your home directory on your clients, you will not be able to log in as a regular user if for any reason your server is down. This would be a rare condition, but it would affect your ability to work from your other devices. You need to be able to log in locally so that you can address the problem.

You could give your local root user a good password, or you could create another local user on each device and give them administrative rights so that they can use sudo for root access locally:

// Suppose the other user is called 'helper'

// We will give the helper user another home directory

$ sudo mkdir /var/home

$ sudo groupadd -g 1100 helper

$ sudo useradd -u 1100 -g helper -m -d /var/home/helper -c 'Helper Account' helper

$ sudo usermod -aG sudo helper

$ grep sudo /etc/group

sudo:x:27:myname,helper

// Don't forget to set a password - presumably it would be the same as your password.

$ sudo passwd helper

[sudo] password for myname:

New password:

Retype new password:

passwd: password updated successfully

The main benefit of letting the automounter unmount your directory when you log out is that you can easily do maintenance on the server without disturbing the state of your clients - that is, your desktop or laptop or other devices which might still be turned on.

When you log out of a client, the automounter should unmount your directory once the configured time limit expires (5 minutes in our case). But some misbehaved software insists on staying around, and you might want to watch out for it and investigate what you can do about it. Indeed, your ssh-agent (if you are using it) is not going to go away unless you kill it before logging out. This process alone will keep your home directory mounted on the client.
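To find out whether a home directory is still mounted after the timeout, you can look for its mount point in the kernel's mount table. The following is a minimal sketch; the function name is_mounted is hypothetical, and the optional second argument exists only so that the function can be tried against a saved copy of the table.

```shell
# is_mounted DIR [TABLE]
# Succeed if DIR appears as a mount point in TABLE (default /proc/mounts).
is_mounted() {
    dir=$1
    table=${2:-/proc/mounts}
    # Field 2 of each line in the mount table is the mount point
    awk -v d="$dir" '$2 == d { found = 1 } END { exit !found }' "$table"
}
```

For example, 'is_mounted /home/myname && echo still mounted'. If the directory is still mounted long after you logged out, 'sudo fuser -vm /home/myname' (from the psmisc package) should list the processes that are holding it.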

One solution is to let the display manager clean up for you. If you are using the lightdm display manager then you need to make a root-owned script on your Linux client that will do the job:

// Remember, this is done on your Linux client desktop, not on the server:

$ cd /etc/lightdm

$ sudo /bin/bash

# touch lightdm.conf

# nano lightdm.conf

# cat lightdm.conf

[Seat:*]

session-cleanup-script=/root/bin/clean-up-user.sh

# cd /root

// make a bin directory if you don't have one

# mkdir -p bin

# cd bin

# touch clean-up-user.sh

# chmod 755 clean-up-user.sh

# nano clean-up-user.sh

# cat clean-up-user.sh

#!/bin/sh

## This script is called by the lightdm display manager. It finds processes

## owned by users on logout, and kills them. Don't use this script if your

## users need to leave any of these processes running on logout.

procs=$(ps -ef | grep -v grep | grep '/lib/systemd/systemd --user' | grep -v '^root')

echo "$procs" | while read user process rest ; do

#echo debug: user is $user, proc is $process

if [ "$user" != "" ] ; then

#echo debug: kill $process

kill $process

fi

done

procs=$(ps -ef | grep -v grep | grep ssh-agent | grep -v '^root')

echo "$procs" | while read user process rest ; do

#echo debug: user is $user, proc is $process

if [ "$user" != "" ] ; then

#echo debug: kill $process

kill $process

fi

done

If you are using an X2Go remote desktop session then you will not go through the display manager, so the above script will not be called. You can always create a copy of this script in your own ~/bin/ directory and run it from a terminal session just before logging out.
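The two loops in the clean-up script differ only in the process they search for, so a copy kept for manual use could be shortened with a small filter. This is a sketch under my own assumptions, not a tested replacement for the script above: the function name pids_by_name is hypothetical, and it matches on the exact command name rather than searching the full command line, which also avoids having to filter out the searching process itself.

```shell
# pids_by_name NAME
# Read "user pid command" lines on standard input and print the PID of
# every process called NAME that is not owned by root.
pids_by_name() {
    awk -v name="$1" '$1 != "root" && $3 == name { print $2 }'
}
```

The input can come from 'ps -eo user=,pid=,comm=', for example 'ps -eo user=,pid=,comm= | pids_by_name ssh-agent'. Once you are happy with the list it prints, the PIDs can be passed on to kill, for instance with 'xargs -r kill'.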